I thought this was interesting and worth sharing, since I couldn't find any info on it online. I ran the profiler on my watch project after compiling with -O 2pz and with -O 3pz, and 2pz was actually faster and more efficient. That made me curious to try -O 1pz, which came out slightly more efficient than -O 3pz, but not by much. So, at least for me, I'm going to stick with -O 2pz.

The test was simple: I ran the simulator with the profiler enabled and used time simulation to speed things up, running it for about 16 simulated hours.

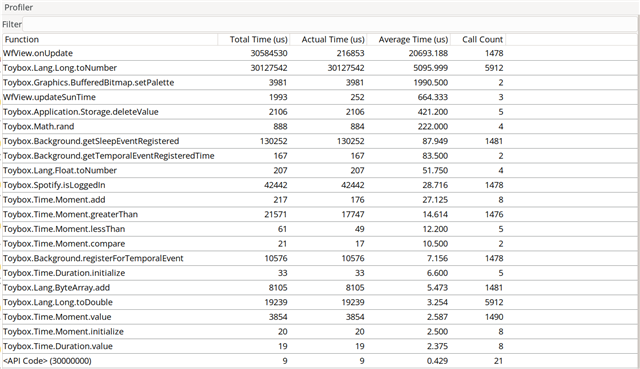

Here are my results with -O 3pz:

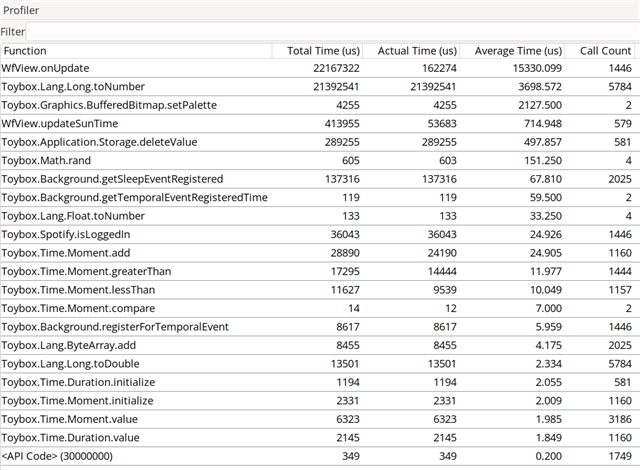

Here are my results with -O 2pz:

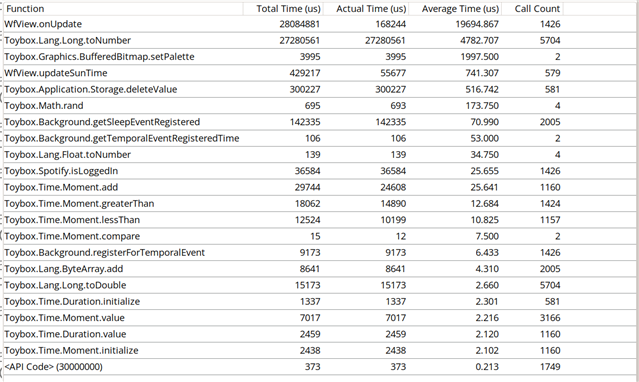

And here are my results with -O 1pz:

Has anyone else seen this in their apps/watchfaces? How big of a difference do the different options make for your code?