Sorry if this is confusing; I haven't slept in a few days. Long story short, I'm writing my first data field and I'm pulling in SunCalc. For reasons too silly to get into, I'm seeing the precision of the data types used internally by the Moment class behave differently on the simulator vs. on real hardware.

Consider the following (simplified) code, which originated in SunCalc:

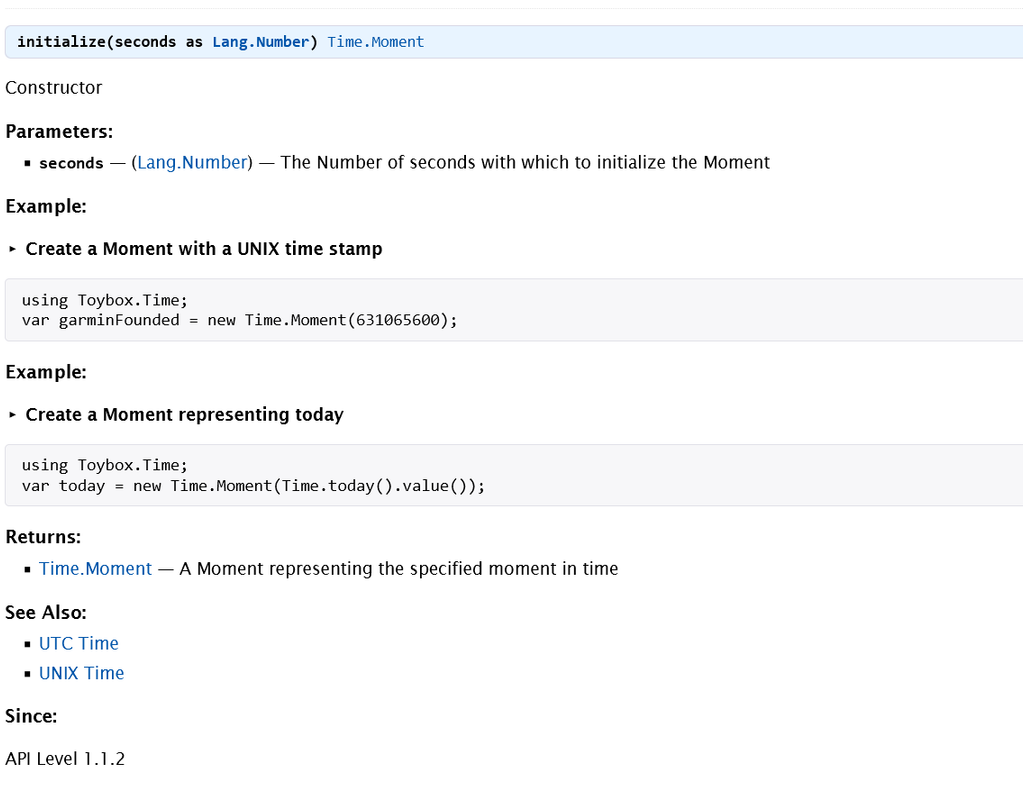

This constructs two Moment objects (via a wrapper function; this is important), subtracts them, and prints the delta. On both real hardware and sim (Venu3 for both), the delta prints as 2000, just as we'd expect.

Now consider the following minor modification:

We're now constructing Moments using fractional seconds (which is what happens in SunCalc internally). I believe this will still print 2000 on real hardware (though I haven't re-tested the simplified version), while the simulator prints 2048.

There seems to be some monkey business going on. I'm guessing the simulator uses the underlying duck types to emulate a Moment, whereas real hardware implements Moments in native code with stricter typing? The wrapper function matters here: if we simply call new Time.Moment(1234.5), we get a type error, but routing the call through an intermediate function lets us create a Moment from fractional seconds (at least on sim). On real HW, does the Moment truncate the input argument to an int?

My guess is that we get 2048 on sim because we end up with a Moment whose timestamp is backed by a float instead of an int, so we're hitting loss of precision. But this is just a guess. Any input would be appreciated.
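As a rough sanity check on the float guess, here is a small Python sketch (not Monkey C, and not the code from the screenshot). It assumes the timestamp ends up stored as a 32-bit float, and the epoch value used is hypothetical, picked only to be in the range of current Unix times. Between 2^30 and 2^31 a 32-bit float can only represent multiples of 128, so a 2000-second gap can easily come out as 2048.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# Hypothetical timestamps: fractional epoch seconds, exactly 2000 s apart.
t1 = 1_700_000_000.5
t2 = t1 + 2000

# Assumption: the Moment keeps the value as a 32-bit float.
delta_f32 = to_float32(t2) - to_float32(t1)

# Truncating to an integer first (what calling .toNumber() would do).
delta_int = int(t2) - int(t1)

print(delta_f32)  # 2048.0
print(delta_int)  # 2000
```

The exact error depends on the timestamps involved, but at this magnitude any float-backed delta will be a multiple of 128, which is at least consistent with seeing 2048 instead of 2000.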

The trivial fix is to call .toNumber() in the wrapper function above (I'll send a PR to SunCalc later), but I suspect I've stumbled on something more interesting here. I'm on Linux, CIQ SDK 6.4.1, Venu3 for both sim and real HW.

Am I completely losing my mind here?

Thank you!