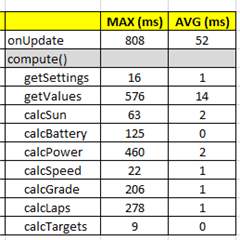

I added getTimer() calls before and after the logic functions in compute(), then calculated the max and average duration of each call over about 5000 iterations. To avoid startup skew, I waited 10 seconds before starting to collect the data.
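A minimal sketch of this kind of instrumentation, assuming a small helper class. `CallTimer` and its members are hypothetical names for illustration; only `System.getTimer()`, which returns a millisecond count as an integer, comes from the Connect IQ API.

```monkeyc
using Toybox.System;

// Hypothetical helper: tracks call count, total, and max duration in ms.
class CallTimer {
    var count = 0;    // number of timed calls
    var totalMs = 0;  // sum of all durations, for the average
    var maxMs = 0;    // longest single duration seen
    var startMs = 0;  // timestamp taken just before the timed call

    // Call immediately before the function being measured.
    function start() {
        startMs = System.getTimer();
    }

    // Call immediately after the function being measured.
    function stop() {
        var elapsed = System.getTimer() - startMs;
        count += 1;
        totalMs += elapsed;
        if (elapsed > maxMs) {
            maxMs = elapsed;
        }
    }

    // Average duration in ms, or 0.0 if nothing has been timed yet.
    function avgMs() {
        return count > 0 ? totalMs.toFloat() / count : 0.0;
    }
}
```

Inside compute(), each logic function would be wrapped as `timer.start(); calcSun(); timer.stop();`, with one `CallTimer` instance per function. Because the timer value is in whole milliseconds, any call that finishes in under 1 ms is recorded as 0.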

Is it reasonable that relatively complex compute logic functions take only 0-2 ms on average to run? Or is getTimer() not reliable on an Edge 1030 device? I know it isn't reliable in the simulator.

It is also interesting to me that the max durations are so much longer than the averages, and I'm not sure why the gap is so large. What could cause, say, my calcSun (which determines sunset and sunrise) to average 2 ms but, at least once, take 125 ms to complete?

I was also following a 126-mile turn-by-turn route during my ride. Is it possible that when I deviated from the route and the device recalculated a way to get back on it, that slowed the processing of the functions?