I'm making a web request, and the odd thing is that the response code my callback receives differs from the one shown in the traffic log.

I make a simple request as follows:

    // Thin wrapper around Communications.makeWebRequest (GET, JSON response)
    function makeWebRequest(url, params, callback) {
        var options = {
            :method => Communications.HTTP_REQUEST_METHOD_GET,
            :headers => {
                "Content-Type" => Communications.REQUEST_CONTENT_TYPE_URL_ENCODED
            },
            :responseType => Communications.HTTP_RESPONSE_CONTENT_TYPE_JSON
        };
        Communications.makeWebRequest(url, params, options, callback);
    }

    // Requests a 7-entry forecast for the last known location
    function loadWeatherPreview(callback) {
        BackgroundUtil.makeWebRequest(
            "https://api.openweathermap.org/data/2.5/forecast",
            {
                "lat" => Props.get(Constants.PROP_LAST_LOC_LAT),
                "lon" => Props.get(Constants.PROP_LAST_LOC_LONG),
                "appid" => Props.get(Props.OWM_KEY),
                "cnt" => 7,
                "units" => "metric" // Celsius
            },
            callback
        );
    }

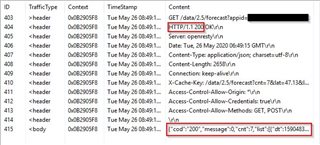

In my callback I get -403 as the response code and an empty data value, but as the screenshot shows, the traffic log reports a response code of 200.

How can this be?
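
For context, the callback that receives these values is shaped roughly like the sketch below. This is only an illustration: the name `onWeatherReceived` and the log messages are hypothetical and not taken from my actual code. As far as I can tell from the `Toybox.Communications` constants, negative codes are raised on the Connect IQ side rather than sent by the server, and -403 appears to correspond to `Communications.NETWORK_RESPONSE_OUT_OF_MEMORY`, which might explain why the traffic log can still show 200.

```monkeyc
import Toybox.Lang;
import Toybox.System;

// Hypothetical callback; the name and messages are illustrative only.
function onWeatherReceived(responseCode as Number, data as Dictionary or String or Null) as Void {
    if (responseCode == 200) {
        // The server answered and the response was parsed successfully
        System.println("Forecast received");
    } else if (responseCode < 0) {
        // Negative values are Connect IQ error codes, not HTTP statuses
        System.println("Connect IQ error: " + responseCode);
    } else {
        // Positive non-200 values are HTTP statuses returned by the server
        System.println("HTTP error: " + responseCode);
    }
}
```

Splitting the branches this way at least makes it visible in the log whether a failure came from the server or from the device-side handling of the response.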