Hi,

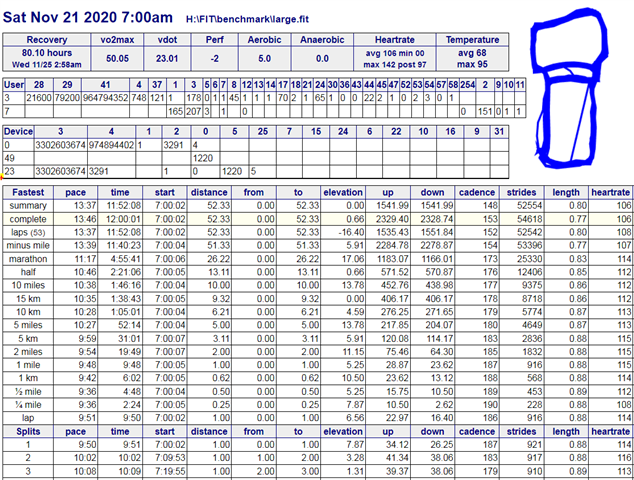

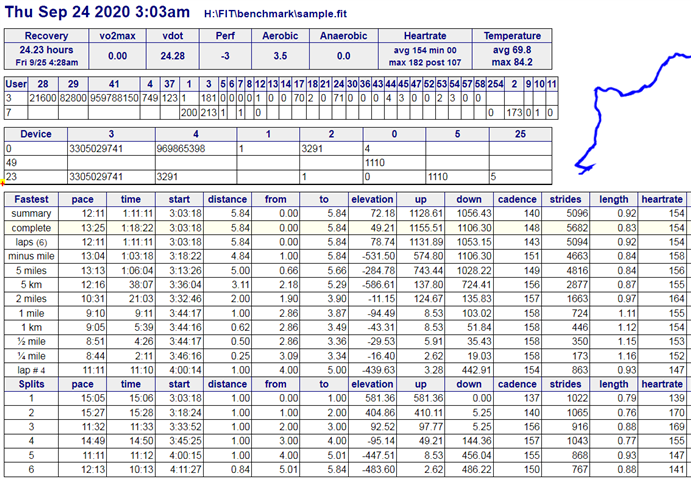

Just wanted to share: I've started putting together a list of libraries that decode FIT files, along with a simple benchmark of each one's performance, since performance is an issue for my own application.

Hopefully it can be useful to others as a reference. Feel free to add other libraries or suggest others to look at.