When searching workouts, Garmin provides some that let you repeat a combination of sets for, say, 20 minutes. How could I make my own workout with a time limit?

"some that let you repeat a combination of sets for, say, 20 minutes"

Are you referring to the "Find a Workout" options with titles like "20-Minute Full Body Blast"? If so, that 20-minute label is just an estimate based on the sum of the step durations and some pacing assumptions. Under the hood, Garmin Connect doesn't support repeat-until-time logic. You can repeat sets for a fixed number of rounds, and each step can have a time duration, but there's no native way to loop a block dynamically for, say, 20 minutes. A possible workaround is to start a separate 20-minute timer on your watch. When the timer goes off, end the workout, or use "Skip Group" to move on to the next group of sets.

If you'd like to see this feature added in the future, I recommend submitting the request on the Garmin Ideas page.

I'm using the German localization, and if I select "10-Minuten-AMRAP mit eigenem Körpergewicht" (roughly "10-minute bodyweight AMRAP"), the "external timer" seems to be built in: you can do a sequence of exercises for, e.g., 10 minutes. However, I can't create such a timed loop in either the web interface or the app. ChatGPT thinks it can create an XML file with the required elements, but I couldn't manage to import that TCX file into Garmin Connect.

Bro, ChatGPT doesn't "think" anything. It always makes stuff up, and what it makes up happens to be what you want to hear. Sometimes what it makes up coincides with reality, tho. The problem is that a human being still needs to validate what it says. You can't really evaluate ChatGPT's output (especially on complex or niche topics) unless you're already an expert on the subject.

"Chat GPT thinks it can create an XML file that has the required elements but I couldn't manage to import that tcx into my Garmin Connect."

Take the above statement, for example: Garmin workouts aren't specified as TCX or XML at all. If ChatGPT could actually think, it might say "I don't know how to do what you are asking." But because it can't think (and bc its creators want you to trust it and use it), it very confidently spouts nonsense in response to your prompt.

And yes, you're correct: the pre-programmed workouts do seem to have a feature where a group of exercises is limited by time rather than by a number of repeats.

As you've noticed, this is available with the pre-programmed workouts, but not the custom workouts that you create yourself.

Anyway, here's how you can actually accomplish what you're trying to do. Sorry it's not as easy as typing a prompt into ChatGPT. You'll ofc need a computer, and you'll also need to use Chrome.

1) Download VS Code: https://code.visualstudio.com/

2) Install the following Chrome extension: "Share your Garmin Connect workout"

3) Sign into the Connect website and create a new Cardio or Strength workout (Training & Planning > Workouts)

4a) Add a repeating sets/circuits group using the Add Sets / Add Circuits button

4b) Add the exercises you want to the repeating group

5) Save the workout and click the Download button (which is added by the extension). If the download button is not present, resize the Chrome window horizontally until it appears.

This will save the workout as a JSON file - e.g. "Strength Workout.json"

6) Open the JSON file in VS Code

In VS Code:

7) Open the command palette (CTRL-SHIFT-P / CMD-SHIFT-P) and select "Format Document"

8) Search for the following text (including quotes):

```json
"conditionTypeKey": "iterations"
```

This should appear in a block of text which looks like this:

```json
"endConditionValue": 2.0,
"preferredEndConditionUnit": null,
"endConditionCompare": null,
"endCondition": {
  "conditionTypeId": 7,
  "conditionTypeKey": "iterations",
  "displayOrder": 7,
  "displayable": false
},
"skipLastRestStep": null,
"smartRepeat": false
```

The above block of code represents a group which repeats 2 times.

Replace the above block of code with the following code:

```json
"endConditionValue": 600,
"preferredEndConditionUnit": null,
"endConditionCompare": null,
"endCondition": {
  "conditionTypeId": 2,
  "conditionTypeKey": "time",
  "displayOrder": 2,
  "displayable": true
},
"smartRepeat": false
```

In this case, 600 means 600 seconds (10 minutes). Replace 600 with whatever time limit you want.

9) Repeat step 8 for all the groups that exist in your workout

10) Save the file - you can rename it if you want

In the Connect website:

11) Navigate to the list of all workouts: Training & Planning > Workouts

12) Click the Import Workout button, which is added by the extension

13) Select the file you saved in step 10

NOTE:

- you will no longer be able to edit the workout in the UI after modding it like this, so make sure you do this procedure after making all the other changes you want

- I didn't test the resulting workout on a device, but I have no reason to believe it won't work

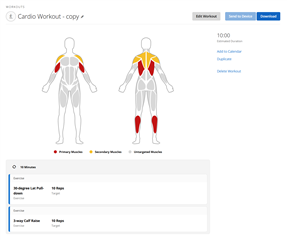

Here's an example of a cardio workout I modded to have a time limit (yeah I know it should be strength)
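If the workout has several groups, steps 8 and 9 can also be scripted instead of done by hand in VS Code. Below is a minimal Python sketch, assuming the file saved in step 5 is plain JSON and that every repeat group is marked by an `endCondition` whose `conditionTypeKey` is `"iterations"`, exactly as in the step 8 block. It walks the whole file rather than relying on any particular nesting (the exact structure of Garmin's export is an assumption here), so nested groups get converted too, and every group gets the same limit. The script name `timed_groups.py` and its arguments are hypothetical. Like the manual edit, this is untested on a device.

```python
import json
import sys
from pathlib import Path
from typing import Any

# Replacement end condition, copied from the manual edit in step 8.
TIME_CONDITION = {
    "conditionTypeId": 2,
    "conditionTypeKey": "time",
    "displayOrder": 2,
    "displayable": True,
}


def make_time_limited(node: Any, seconds: int) -> int:
    """Turn every iteration-based repeat group under `node` into a time-limited one.

    Assumption: repeat groups are exactly the objects whose endCondition has
    conditionTypeKey == "iterations" (as described in step 8). Edits in place
    and returns how many groups were changed.
    """
    changed = 0
    if isinstance(node, dict):
        cond = node.get("endCondition")
        if isinstance(cond, dict) and cond.get("conditionTypeKey") == "iterations":
            node["endConditionValue"] = seconds          # time limit in seconds
            node["endCondition"] = dict(TIME_CONDITION)  # fresh copy per group
            node.pop("skipLastRestStep", None)           # the step 8 replacement omits this key
            changed += 1
        for value in node.values():
            changed += make_time_limited(value, seconds)
    elif isinstance(node, list):
        for item in node:
            changed += make_time_limited(item, seconds)
    return changed


def main(argv: list[str]) -> None:
    if len(argv) != 3:
        print("usage: python timed_groups.py IN.json OUT.json MINUTES")
        return
    src, dst, minutes = Path(argv[0]), Path(argv[1]), float(argv[2])
    workout = json.loads(src.read_text(encoding="utf-8"))
    count = make_time_limited(workout, round(minutes * 60))
    # ensure_ascii=False keeps non-ASCII workout names (e.g. German umlauts) readable.
    dst.write_text(json.dumps(workout, indent=2, ensure_ascii=False), encoding="utf-8")
    print(f"Converted {count} group(s) to {minutes:g} min -> {dst}")


if __name__ == "__main__":
    main(sys.argv[1:])
```

For example, `python timed_groups.py "Strength Workout.json" "Strength Workout timed.json" 10` would give every group a 600-second limit; you would then import the new file as in steps 11 to 13. If different groups need different limits, the manual edit is still the way to go.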

"But because it can't think (and bc its creators want you to trust it and use it), it very confidently spouts nonsense in response to your prompt."

Very true. In most cases, you need to know a bit about the topic. AI will rarely respond with “I don’t know,” which then sends the unwitting user down a rabbit hole. It never admits defeat, and my personal pet peeve is the overly sycophantic responses.

Yeah, I find it interesting - and super annoying - that LLMs are now trusted *more* than human beings, because they spout authoritative-sounding text. Where do people imagine that text came from in the first place? All the original training data was generated by humans. But humans typically understand what they are talking about - and they typically know what they don't know. LLMs don't understand or know anything. They're just really fancy autocompletion engines.

If any of us were friends or coworkers with a human being who constantly made up BS that was wrong a good percentage of the time, and who never once admitted not knowing something, that person would probably be ostracized or fired. At the very least, people would soon learn to distrust (and dislike) that person. I mean, there are a lot of words for a person like that: sociopath, liar, a--hole, etc.

But when the AI bros create chatbots which do this, we just give them endless amounts of cash and attention. Oh, and we start the process of replacing everyone and everything with them.

Oh btw, even after all of that, the AI bros are still not making a profit. Better throw a few more billion dollars at them.

"AI will rarely respond with “I don’t know,” which then sends the unwitting user down a rabbit hole."

This only happens because people blindly trust LLMs in the first place. I can't believe how many times I've seen people referring to ChatGPT as if it's a really smart person, and expressing surprise that it told them some nonsense that isn't true. Ofc most ppl won't bother to fact check everything (or anything) ChatGPT says, as that would defeat the purpose of using it in the first place, right? (The purpose is laziness / saving money / eliminating people.) That's why we see all these news stories about lawyers and consulting companies getting in trouble for creating legal briefs and reports full of hallucinations.

Anyone who actually understands what LLMs are would realize that "hallucinations" are in fact all they ever generate. It just so happens that sometimes those hallucinations coincide with reality.

(This is why "hallucination" is such a misleading and insidious term - it implies that LLMs think just like human beings, and that when they happen to be incorrect, it's just an unlucky "hallucination". But when a human being hallucinates, we send them to the doctor because it's not normal. When an LLM hallucinates, it's not broken; that's just its normal mode of operation - you happened to be unlucky enough to get a hallucination that doesn't correspond to reality.)